In his 15-year career as a reporter, Eric Litke has come to expect a certain number of angry emails and social media messages from people who are incensed by his stories.

“That always comes with the trade,” he said.

But since he started fact-checking for USA TODAY and PolitiFact Wisconsin — a partnership between the Milwaukee Journal Sentinel and the nonprofit organization PolitiFact — he’s sensed a shift in the public perception of his work. He’s always shared the stories he’s most proud of on his social media accounts, but the abusive messages he receives have become more personal over the past three years, and increasingly come from people in his own orbit.

Despite striving for objectivity, his fact-checks — some of which help Facebook moderate its content — seem to prompt irrational and highly emotional reactions. He recently had a longtime friend tell him on Facebook that he “used to do work that mattered.”

“The tenor of the comments in response to that is very different when I’m doing something investigative versus when I’m doing something in the fact-checking role,” Litke said. “Now there’s this instinctual reaction where people are not interested in critically engaging with the arguments and the data and the critical thinking behind it. They’re just looking at, ‘OK, you rated this guy in this way, therefore you are scum of the earth, or you’re a brilliant person to be commended,’ purely based on where we landed.”

Even worse, he received a vaguely threatening email from a stranger, one that he and his editors decided was serious enough to report to the police.

“That hasn’t come up in my career before,” he said. “It’s kind of an indicator of where things are at, that people are willing to send off emails that reach that extreme point where you kind of go, ‘I don’t really think you’re going to show up at my house, but we’re far enough over the line that we should probably notify some people.’”

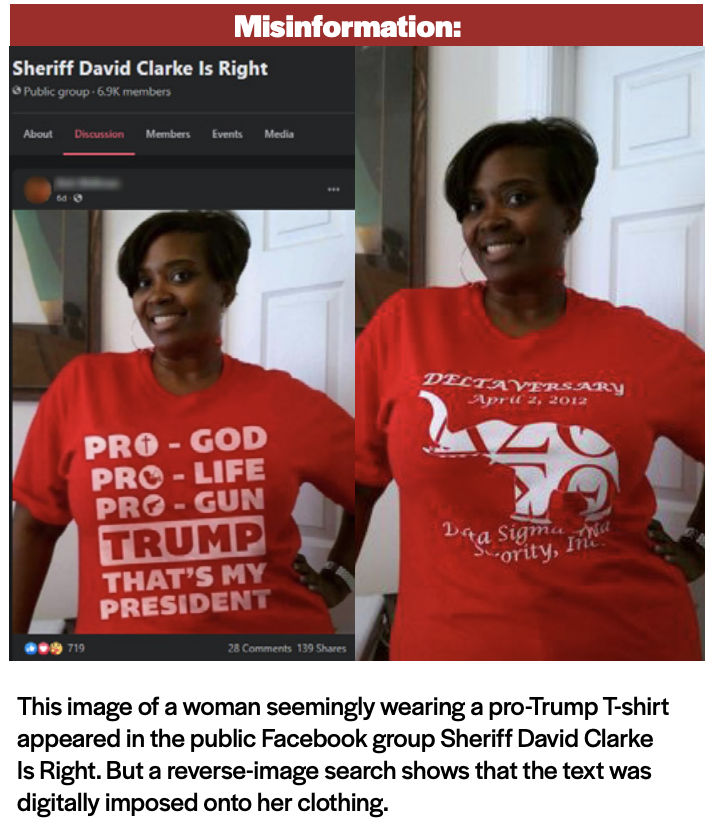

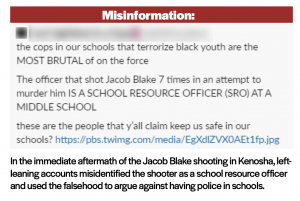

The current hostile climate forces reporters to consider possibilities that once seemed far-fetched: physical threats, doxxing, digital privacy and security breaches, and malicious manipulation of photos from their social media accounts. Such attacks can threaten reporters’ physical safety, limit free expression, and even drive journalists out of the industry entirely.

As objective truth has come under assault in online spaces, so have reporters — who should probably think twice about their digital security.

II. ‘Inextricably linked’: Disinformation and online harassment

Online harassment is when an individual or group targets somebody else in a severe, pervasive and harmful way. It’s an umbrella term that includes several types of tactics, including hate speech, sexual harassment, hacking and doxxing — which means spreading someone’s personal information online.

Female reporters disproportionately bear the brunt of these attacks. A recent report from the United Nations Educational, Scientific and Cultural Organization (UNESCO) and the International Center for Journalists declared online harassment the “new front line for women journalists.” In a global survey of more than 700 women in journalism, 73% said they had experienced some form of online violence.

The respondents said they received threats of sexual assault and physical violence, digital security attacks, doctored and sexually explicit photos of themselves, abusive and unwanted messages, attempts to undermine their personal reputations and professional credibility, and financial threats. About two-fifths said their attacks were linked to orchestrated disinformation campaigns.

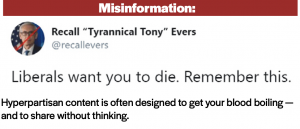

Online harassment is often “inextricably linked” with disinformation campaigns, which seek to discredit newspapers and other democratic institutions, but can also be directed at individual reporters, said Nora Benavidez, a First Amendment and voting rights advocate with PEN America, which offers online abuse defense trainings to reporters and newsrooms across the country.

“These are issues that permeate online and are used as weapons in maybe distinct ways, but their tactic writ large is to constantly sow doubt, to discredit the narratives that people see,” she said.

In both cases, the attacks can be highly coordinated, despite looking as if the victim is being bombarded at random. (The Media Manipulation Casebook is a collection of in-depth investigations that show a high level of coordination in online harassment and disinformation campaigns, including medical misinformation about COVID-19 that started metastasizing in online Black communities early in the pandemic.)

“I think of online harassment and disinformation as something like two sides of the same coin, or maybe two prongs of the same spear, right?” said Viktorya Vilk, the director of digital safety and free expression programs at PEN America. “The goal in both cases is to spread disinformation or inaccurate information to pollute the larger landscape. The accurate information they’re trying to discredit comes from reputable sources. In order to do that, you have to prove that those professional reputable sources aren’t reliable — you have to discredit, intimidate and silence the reporters behind the accurate, professionally produced information that you want to undermine or dilute.”

But that doesn’t mean the two issues always go hand-in-hand. Not all online harassment is part of a campaign; sometimes it’s truly chaotic, as incensed social media users pile on to somebody who has drawn their ire.

Despite their similarities, disinformation and online harassment aren’t studied and understood the same way. Whereas much of disinformation research is devoted to uncovering networks of bad influencers, social media bots and faux news outlets, online harassment tends to get a less scientific examination, according to Benavidez and Vilk. Researchers and reporters often choose to focus on the anecdotes of individuals who have suffered acutely from being doxxed or otherwise targeted, rather than digging into the mechanisms behind the attacks.

“Because there aren’t really good forensic investigations as this stuff plays out, we don’t have a lot of evidence,” Vilk said. “There might be all kinds of coordination happening in the dark corners of the web. … But we don’t have loads of evidence to say, at scale, this is how coordinated these (harassment campaigns) are.”

III. Batten the hatches: How journalists can tighten their online presence before they’re targeted by online harassment

For local reporters, getting doxxed can feel like a far-off hypothetical scenario that only happens to national reporters or those in other countries.

“It is still a new version of harassment that is on the periphery for most people,” Litke said. “If you don’t know somebody who it’s happened to, it doesn’t feel real.”

But as mis- and disinformation creep into every aspect of public discourse, the likelihood of reporters getting targeted by abusers online increases. And preparing for the possibility of being doxxed, impersonated or otherwise targeted by online harassment is more effective than reacting to it, according to PEN America’s Online Harassment Field Manual.

Damon Scott, a reporter with the Seminole Tribune in South Florida, says that reporting on disinformation feels different than most beats. In 2020, he monitored local mis- and disinformation as a news fellow for the global fact-checking organization First Draft. He’d never taken such a deep dive into disinformation before last year and “went into it a little bit naive about what I’d end up dealing with on the day-to-day,” he said.

“I had never really sat down and analyzed misinformation like we did for the fellowship, and I wasn’t prepared for how it affected my mood and my spirit,” he said. “The whole experience was more distressing than I thought it would be. If I had to do it all over again, I would do it, but it was way more eye-opening than I thought it would be.”

He was particularly discouraged by the scale of the problem and seeing so many social media influencers with significant followings using their megaphones irresponsibly. But as a local reporter who mostly worked behind the scenes to produce newsletters about Florida-specific information disorder — he didn’t engage directly with bad actors on social media — Scott was moderately concerned about his digital security.

He tightened up his social media presence by unfriending practically everyone in his Facebook network, changing his username and approaching his account as strictly professional.

“Not that anybody in these (Facebook) groups would have known who I was before, but if they tried to track me down, they might be able to find out,” he said.

Building a support network is another effective way to be proactive. Reporters who produce solid explanatory work and become trusted resources in their online communities may be less vulnerable when they’re targeted by hackers and trolls.

“Once you have a pretty good brand, you can tell people what’s happening to you and they will come to your assistance, especially if you give them some guidance on what kind of help you would want,” Vilk said.

For example, if a reporter discovers that they are being impersonated on social media, asking their network to help them report the account to a tech company will increase the likelihood that it will quickly be removed.

“In that case it’s quite important to speak out about it,” Vilk said. “Say, ‘Hey, this isn’t me, please help me report this account. I’m being impersonated, don’t believe anything that comes out of this account.’ ”

One way for reporters to build trust within their networks is to use social media to explain and provide insights into their newsgathering process, Benavidez said.

“At the risk of sounding like it’s homework, I think tiny nuggets like that can be incredibly moving and powerful for readers,” she said. “And those can help as preemptive tools you use in the event that a disinformation campaign targets you or your newsroom.”

Another way to stay a step ahead of abusers is by tightening up one’s social media accounts and online presence, Vilk said.

“The good news for reporters is that they’re trained to investigate things, they just never turn that on themselves,” she said. “But that’s what they need to do — they need to basically think like a doxxer and start digging into their own footprint online in order to understand what’s out there and how to get that information.”

- Google yourself

Though this may seem “comically obvious,” Vilk said, get started by running your name, account handles, phone number and home address through various search engines. Start with Google, but don’t stop there. Google tailors search results to each individual user, which means a doxxer will get different results when they’re searching for your personal information. Use a search engine such as DuckDuckGo, which prioritizes users’ privacy, to break out of the filter bubble of personalized results. For an even more complete view of your online footprint, run your information through the Chinese search engine Baidu.

- Set up alerts

You can’t be expected to monitor mentions of your name and personal information around the clock — and you don’t have to. Set up Google alerts for your name, account handles, phone number and home address. At least you’ll know if your information starts circulating online.

“You might want to do it for friends and family, too,” Vilk said.
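For reporters comfortable with a little scripting, the search and alert steps above can be prepared in one pass. The sketch below is only an illustration: the function names are hypothetical, the sample details are invented, and the phone formatting assumes a 10-digit U.S. number. It builds a list of exact-phrase search terms that could be pasted into several search engines or into an alert service.

```python
def phone_variants(digits: str) -> list[str]:
    """Return common written forms of a 10-digit U.S. phone number (an assumption)."""
    if len(digits) != 10 or not digits.isdigit():
        raise ValueError("expected exactly 10 digits")
    area, prefix, line = digits[:3], digits[3:6], digits[6:]
    return [digits, f"{area}-{prefix}-{line}", f"({area}) {prefix}-{line}"]


def build_search_terms(
    name: str, handles: list[str], phone_digits: str, address: str
) -> list[str]:
    """Collect the personal details worth searching for, each as a quoted phrase."""
    raw = [name, *handles, *phone_variants(phone_digits), address]
    # Wrapping a term in double quotes asks most search engines for an exact match.
    return [f'"{term}"' for term in raw]


if __name__ == "__main__":
    # Invented sample data; substitute your own details.
    for term in build_search_terms(
        "Jane Doe", ["@janedoe"], "5555550123", "123 Main St"
    ):
        print(term)
```

Running the same list for a family member, as Vilk suggests, only requires swapping in their details.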

- Audit your online presence

Perhaps the most important step is tightening settings on your social media accounts so bad actors don’t have access to your personal information, or that of your loved ones. Be strategic about which accounts you’re using for which purpose, Vilk recommended. If you’re a reporter who uses Twitter to share your stories, keep up with colleagues and interact with your audience, keep it strictly professional. This isn’t the place for pictures of your cat or holidays with relatives, and it’s wise to scrub the account of potentially embarrassing tweets and photographs you forgot about. Don’t share where you live, your birthday, your cell phone number, or anything else that could be used to track you down.

“If you’re using Instagram for photos of your dog and your baby, you should set your Instagram to private and put whatever you want on there,” she said. “But it should be separate from public accounts.”

- Search for old CVs and bios

In a not-so-distant era of the internet, it was common for journalists and academic researchers to upload CVs, resumes and bios including personal information to personal websites. Search for forgotten documents that still live online and could potentially serve as goldmines for would-be doxxers.

- Don’t forget data brokers

As a reporter, you may have come across data broker websites such as Spokeo and Whitepages while tracking down hard-to-reach sources. Such websites scour the internet for personal information and sell it — giving doxxers an easy way to find a target.

As of August 2020, users can request their personal information be removed from Whitepages.com by following the steps on the website’s help page. For websites that don’t have a step-by-step protocol, you can demand via email that your personal information be removed.

If that’s too time-intensive, consider subscription services such as DeleteMe or PrivacyDuck, though the expense may be difficult for an individual reporter to cover.

- Practice good password hygiene

This goes for everyone, not just reporters: If you reuse the same six-character password, such as your birthday, for all of your online accounts, you’re unnecessarily exposing sensitive personal information to hackers and making it far easier for somebody to pretend to be you. Longer passwords are generally harder to crack, and it’s wise to use a different one for every account. Turning on two-factor authentication adds a further layer of protection.
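As a rough illustration of what long and unique can look like in practice, the sketch below uses Python’s standard `secrets` module to generate a separate random password for each account. The function names, the 20-character default and the 12-character minimum are assumptions made for this example, not recommendations from PEN America; a reputable password manager does the same job and also stores the results.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password drawn from letters, digits and punctuation."""
    # The 12-character floor is an assumption chosen for this sketch.
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice draws from the operating system's secure random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


def generate_passwords_for(accounts: list[str], length: int = 20) -> dict[str, str]:
    """Create a different password for every account name given."""
    return {account: generate_password(length) for account in accounts}


if __name__ == "__main__":
    # Hypothetical account names, for illustration only.
    for account, password in generate_passwords_for(["email", "twitter"]).items():
        print(account, password)
```

Because each password is generated independently, a breach of one account does not hand over the keys to the others.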

IV. You shouldn’t be alone: How newsrooms can support their employees

Though they can shore up their online presence, reporters shouldn’t be left to combat the twin monsters of disinformation and online harassment alone. Many media employers, however, “appear reluctant to take online violence seriously,” according to the UNESCO report. (See PEN America’s guide for talking to employers about online harassment.)

“We all need to be doing something,” Vilk said. “Individual reporters need to be doing things. Newsrooms need to be doing more than they are, and platforms need to be equipping reporters with better tools and features to protect themselves. It’s such a massive problem, it has to be a multi-stakeholder solution.”

Newsrooms can brace for worst-case scenarios by developing policies and protocols to help staff members who are facing abuse from disinformation and discreditation campaigns.

“That sends the message that discreditation campaigns are real and the newsroom takes them very seriously,” Vilk said, “and creates a culture where reporters feel comfortable coming forward and talking internally with the institution about what’s happening to them.”

Vilk encourages newsrooms, though not always successfully, to develop an internal reporting mechanism for reporters to flag particularly egregious attacks. Then the news organization can escalate the issue to a tech company, law enforcement, or a private security company. Creating clear guidelines for what reporters should do under extreme circumstances is critical.

“Sometimes, when you’re in the middle of an attack, it’s so unsettling, frightening, and traumatic, you’re paralyzed,” she said. “So if you have a protocol you can be like ‘OK, I’m going to do this and this. I know who to talk to in my newsroom when this is happening.’”

Newsrooms can also support their reporters by subsidizing subscriptions to information-scrubbing services and providing access to mental health care and legal counsel. And finally, if a reporter is made to feel unsafe at home — perhaps they’ve been doxxed and their home address has been shared — it’s their employer’s responsibility to ensure they have a safe place to go, Vilk said.

“To be honest, a lot of this isn’t happening,” she said, “but it could and should be happening.”

Single-handedly offering such comprehensive support is out of reach for many cash-strapped newsrooms. While disinformation and online harassment campaigns represent a growing threat to the free press, many news organizations are navigating the most resource-scarce landscape they’ve ever encountered.

But Vilk sees opportunity in the way many news organizations have partnered with each other to deliver high-quality reporting during the COVID-19 era. The same spirit of partnership could apply to protecting their reporters from doxxing and harassment, she said. For example, multiple newsrooms could pitch in for a shared security specialist or internal reporting system.

“I think that’s the future,” she said. “That has to happen because I don’t think the disinformation and abuse campaigns are going to let up anytime soon.”

The Center for Journalism Ethics encourages the highest standards in journalism ethics worldwide. We foster vigorous debate about ethical practices in journalism and provide a resource for producers, consumers and students of journalism.